DragGAN AI Photo Editor

DragGAN is based on a generative adversarial network (GAN), a machine-learning model that can create realistic synthetic images. A GAN trains two neural networks against each other: a generator that produces images and a discriminator that tries to tell generated images from real ones. As the discriminator gets better at spotting fakes, the generator is pushed to produce increasingly authentic images.
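
The competition between the two networks can be made concrete with a small sketch of the standard GAN losses. In a GAN, one network (the generator) makes images and the other (the discriminator) outputs a probability that an image is real. The function names and sample probabilities below are illustrative assumptions and are not taken from DragGAN's code.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss the discriminator tries to minimize.

    d_real: probabilities the discriminator assigns to real images being real.
    d_fake: probabilities it assigns to generated images being real.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: lower when the discriminator is fooled."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs early and late in training.
early_fakes = [0.1, 0.2]   # fakes are easy to spot
later_fakes = [0.6, 0.7]   # fakes are harder to spot
print(generator_loss(early_fakes) > generator_loss(later_fakes))
```

The generator's loss falls as its images fool the discriminator more often, which is the sense in which the two networks "compete".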

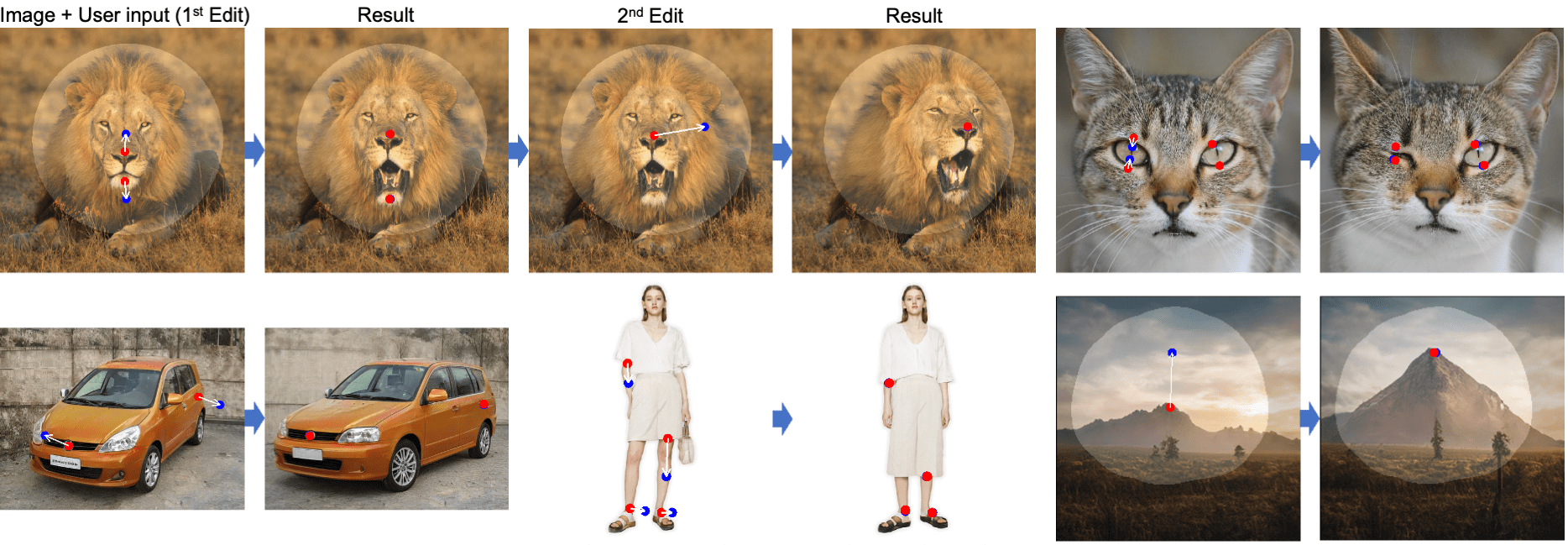

DragGAN presents an intuitive method for simple yet impactful image manipulation. With just a few drag gestures on an image, users can generate natural-looking changes that significantly alter the photo’s content.

Some even call DragGAN a “Photoshop killer.”

What is DragGAN (Source from DragGAN)

What is DragGAN AI Photo Editor?

| Feature | Description |
| --- | --- |
| Name | DragGAN |
| Developer | Developed by researchers at the Max Planck Institute |
| Availability | Code will be released in June on GitHub |
| Interface | Allows users to intuitively drag points within an image to target locations |
| Benefits | Provides more powerful image transformations than Photoshop’s Warp tool |
| Potential Uses | Image editing, generating photorealistic images, 3D image manipulation |

The technique, developed by researchers at the Saarbrücken Research Centre for Visual Computing, the Max Planck Institute for Informatics, MIT CSAIL, and Google, uses generative adversarial networks to realistically edit photographs through straightforward point-and-drag controls.

When a user drags specific points within an image to new locations, DragGAN’s underlying GAN model is guided to produce the corresponding semantic transformation, such as a change in age, hair style, or object shape, while respecting the image context so that the result stays high-fidelity.

The drag-based interface lets users without image-editing expertise explore a wide range of realistic edits and transformations. The photo-realistic results come from the underlying GAN model, which synthesizes new pixels where an edit requires them and supports masking to restrict an edit to a chosen region.

DragGAN Use Cases

DragGAN enables users to make photo-realistic edits by simply dragging points within an image. As long as the image belongs to a category the GAN was trained on, such as people, animals, vehicles, or scenes, DragGAN can produce realistic results.

Users select points in the image and drag them to new locations. DragGAN tracks these manipulations and generates new images that incorporate the edits while remaining photo-realistic.
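
The select-and-drag interaction can be pictured as a pair of coordinates per edit: where the point is now and where the user wants it. The sketch below shows only that geometry, moving a point toward its target in small steps. The `DragPoint` class, the step size, and the function names are hypothetical, and the image regeneration that would happen at every step in a real system is deliberately left out.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]

@dataclass
class DragPoint:
    handle: Point  # current position of the point the user grabbed
    target: Point  # position the user dragged it to

def step_toward(point: DragPoint, step: float = 1.0) -> DragPoint:
    """Move the handle at most `step` pixels along the line to the target."""
    hx, hy = point.handle
    tx, ty = point.target
    dx, dy = tx - hx, ty - hy
    dist = math.hypot(dx, dy)
    if dist <= step:
        # Close enough: snap exactly onto the target.
        return DragPoint(point.target, point.target)
    return DragPoint((hx + step * dx / dist, hy + step * dy / dist), point.target)

def drag_path(point: DragPoint, step: float = 1.0, max_iters: int = 1000) -> List[Point]:
    """Return every intermediate handle position until the target is reached."""
    path = [point.handle]
    for _ in range(max_iters):
        if point.handle == point.target:
            break
        point = step_toward(point, step)
        path.append(point.handle)
    return path

# Drag a point from the top-left corner to pixel (3, 4).
for position in drag_path(DragPoint((0.0, 0.0), (3.0, 4.0))):
    print(position)
```

Taking many small steps rather than one jump is a design choice in this sketch: it leaves room for the image to be updated and checked after each step.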

DragGAN produces high-quality results even for complex edits by synthesizing new pixels that fill in any gaps created by the transformations. This reduces the manual labor typically required with traditional photo editors.

DragGAN also includes a masking tool that allows users to target only specific image regions for editing while leaving the rest untouched. This gives precise control over which areas to transform.
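
The effect of a mask can be illustrated with a simple per-pixel rule: take the edited value where the mask is on and the original value everywhere else. This is a simplified sketch under assumed names (`apply_masked_edit`, nested lists as images). The article does not describe how DragGAN applies its mask internally, and the real tool may enforce it differently, for example inside the generator rather than by compositing pixels.

```python
def apply_masked_edit(original, edited, mask):
    """Combine two same-sized images using a binary mask.

    original, edited: 2D lists of pixel values.
    mask: 2D list of 0/1 flags; 1 marks pixels that are allowed to change.
    """
    if not (len(original) == len(edited) == len(mask)):
        raise ValueError("original, edited, and mask must have the same height")
    result = []
    for orig_row, edit_row, mask_row in zip(original, edited, mask):
        if not (len(orig_row) == len(edit_row) == len(mask_row)):
            raise ValueError("original, edited, and mask must have the same width")
        result.append([e if m else o for o, e, m in zip(orig_row, edit_row, mask_row)])
    return result

# Only the top-left pixel is allowed to change.
original = [[1, 1], [1, 1]]
edited = [[9, 9], [9, 9]]
mask = [[1, 0], [0, 0]]
print(apply_masked_edit(original, edited, mask))
```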

Example transformations DragGAN can make through its point-and-drag interface include:

- Changing a person’s or animal’s pose by dragging points on the figure

- Reshaping or resizing objects by manipulating points on their contours

- Removing or adding objects by synthesizing pixels that seamlessly fill vacated areas or form the new content

- Altering the background by dragging points to transform environmental properties

How does DragGAN differ from other image editing software?

Here are some of the key differences between DragGAN and other image editing software:

Simplicity – DragGAN uses a highly intuitive interface that simply requires users to point and drag on the image to control the edits. This is much simpler than using complex menus, sliders, and options in typical image editing software.

Naturalness – Because DragGAN is built on a GAN, its edits stay within the range of images the model has learned to generate. This tends to produce more natural and realistic transformations than conventional warping algorithms, whose results can look stretched or artificial.

Type of edits – DragGAN allows significant semantic edits that go beyond basic adjustments such as brightness and contrast. It can transform attributes like age, hair color, object shape, and scene context, which is a wider range of edits than most image editors offer.

Ease of use – DragGAN’s simple drag controls make it easier to explore a wide variety of edits and transformations. Users do not need extensive experience with image editing software to create interesting edits.

How to use DragGAN AI Photo Editor?

Here are the steps to use DragGAN for photo editing (code will be released in June on GitHub):

Step 1. Go to the DragGAN website.

Step 2. Upload an image you want to edit. You can select an image from your computer or drag and drop an image onto the upload box.

Step 3. Select a point or object on the image you want to manipulate. DragGAN will highlight the selected area.

Step 4. Drag the selected point or object to the desired location. As you drag, the image will update in real time to show the transformation.

Step 5. Release the mouse or finger to apply the change. DragGAN’s GAN model will then produce a realistic edit based on your manipulation.

Step 6. Continue selecting and dragging points or objects within the image to make additional edits. You can move the same point multiple times to fine-tune the transformation.

Step 7. Use the provided sliders to control the degree or strength of the edit.

Step 8. When you’re happy with your edits, click the “Save” button to export your modified image. You’ll have the option to save the image to your DragGAN account or download it to your device. The original image is also saved, allowing you to revert to it or start a new edit from the same photo.