Stable Diffusion has changed how images are generated from text in the fast-growing field of artificial intelligence. As generative models advance, their output must remain ethical and well controlled. Negative prompts help Stable Diffusion deliver safe, responsible outputs. This article explains what Stable Diffusion negative prompts are and how they can be used to support ethical AI usage.

What Does Negative Prompt Mean in Stable Diffusion?

Anyone working with generative AI would do well to become familiar with negative prompts. A negative prompt tells the model what to leave out, which helps prevent biased, harmful, or inappropriate output. This article explains how negative prompts work in Stable Diffusion and how to use them to get the most from the model while following ethical guidelines. Whether you’re a beginner or an expert, it will help you understand what a negative prompt means and the role it plays in safe AI use.


What is a Negative Prompt?

A negative prompt is an explicit instruction that tells a generative AI model what to leave out of its output. By stating what should not appear, it helps keep offensive or otherwise unwanted content out of the result. In Stable Diffusion, which generates images from text, a negative prompt might list violence, nudity, or visual defects such as blurriness or watermarks.

Using negative prompts is important for encouraging responsible AI usage and reducing the risks associated with AI-generated material. They help make AI technology more trustworthy by keeping generated output within ethical rules and cultural norms. Chatbots, customer service software, and content-generation tools are all examples of products that can benefit from negative prompts before being made available to the general public.

Developers and researchers may improve the user experience and avoid disputes or legal difficulties by building negative prompts into their generation workflows. Negative prompts are an efficient way to steer AI-generated output toward ethical norms, which is essential for the responsible use of AI technology.


How to Enter Negative Prompts on Stable Diffusion?

Stable Diffusion is a text-to-image model that generates images from natural-language prompts. Here’s how to use negative prompts to shape the model’s output:

Enter Negative Prompts on Stable Diffusion

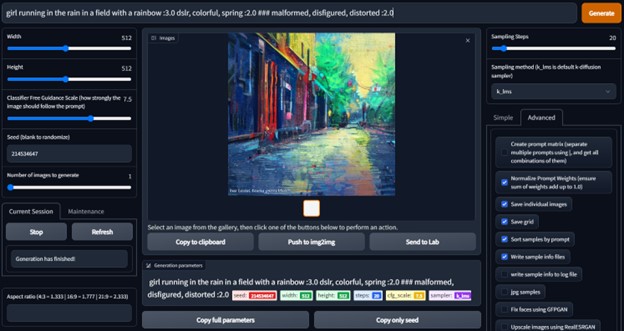

- Access the Stable Diffusion Interface: First, open the Stable Diffusion interface you plan to use, whether that is a web interface or an API.

- Define Your Prompt: Write your main prompt carefully, including all the details the model needs to get started. Then decide what you want the output to avoid, and note those themes or elements for the negative prompt.

- Specify Negative Limits: State clearly what should be left out. Stable Diffusion interfaces typically provide a separate negative prompt field; list the unwanted concepts there directly (for example, “blurry, watermark”) rather than writing phrases like “do not include” or “avoid.”

- Test and Refine: After entering your negative prompts, check the generated output to ensure it meets your needs. If required, refine the prompts to guide the output further.

- Monitor and Change: Keep monitoring what the model produces and change the prompts as needed. Regularly reviewing AI-generated material helps it meet ethical and quality criteria.

Negative prompts let you use Stable Diffusion’s capabilities without giving up editorial control. Carefully crafted prompts can help the model produce more responsible and better-targeted results across use cases such as content production and more.
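The steps above can be sketched in code. This is a minimal sketch, not a definitive implementation: it assumes the Hugging Face diffusers library, whose `StableDiffusionPipeline` accepts `prompt`, `negative_prompt`, `num_inference_steps`, and `guidance_scale` arguments. The helper name `build_generation_args` and the example terms are hypothetical and chosen only for illustration.

```python
from typing import Any, Dict, List


def build_generation_args(
    prompt: str,
    avoid: List[str],
    steps: int = 30,
    guidance_scale: float = 7.5,
) -> Dict[str, Any]:
    """Return keyword arguments with the unwanted concepts in `negative_prompt`."""
    # Drop empty entries and surrounding whitespace before joining.
    terms = [term.strip() for term in avoid if term.strip()]
    return {
        "prompt": prompt.strip(),
        # The negative prompt lists concepts to avoid; no "do not" phrasing is needed.
        "negative_prompt": ", ".join(terms),
        "num_inference_steps": steps,
        "guidance_scale": guidance_scale,
    }


args = build_generation_args(
    "portrait photo of an astronaut, studio lighting",
    ["blurry", " watermark ", "", "extra fingers"],
)
print(args["negative_prompt"])
```

With a pipeline already loaded as `pipe` (an assumption, since loading a model is outside this sketch), the dictionary could be passed as `pipe(**args)`. Keeping the negative terms in a list makes it easier to test and refine them one at a time.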

Identify What Negative Prompts to Use in Different Situations

If you want AI-generated material to meet specific criteria and ethical standards, negative prompts in Stable Diffusion can help. Some situations and strategies for making the most of negative prompts are listed below.

- Content Moderation: Negative prompts may assist in filtering out unsuitable, dangerous, or offensive material on social networking platforms and user-generated content websites. A negative prompt may, for instance, tell the AI to avoid hate speech, violence, or sexually explicit material.

- Reducing Bias: AI models have been shown to produce biased or offensive material, which should be minimized. Negative prompts that steer the model away from biased terminology or content that reinforces stereotypes can lead to more inclusive and equitable results.

- Brand-Specific Guidelines: Businesses may use negative prompts to align AI-generated content with their brand image and rules. The AI may be instructed to avoid mentioning competitors’ names or particular terms, for instance.

- Educational Content: To reduce inaccurate or misleading information, use negative prompts while creating instructional materials with AI. Instructing the AI to avoid erroneous assertions and out-of-date facts helps preserve the integrity and reliability of the material.

Negative prompts should be tailored to each application’s context and needs. By specifying these prompts carefully, users make it more likely that the AI-generated material meets their standards and accomplishes their goals.
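One way to tailor negative prompts per situation is to keep a small table of presets and combine them as needed. The sketch below is only an illustration: the preset names, the terms in each preset, and the function `negative_prompt_for` are all hypothetical, and real term lists would need to be chosen and tested for the application at hand.

```python
# Hypothetical presets; the terms are illustrative, not recommended values.
NEGATIVE_PRESETS = {
    "content_moderation": ["violence", "gore", "nudity"],
    "brand_guidelines": ["logo", "watermark", "text"],
    "educational": ["watermark", "illegible text", "distorted anatomy"],
}


def negative_prompt_for(*situations: str) -> str:
    """Merge the presets for the given situations, dropping repeated terms."""
    merged = []
    for situation in situations:
        for term in NEGATIVE_PRESETS[situation]:
            # Keep the first occurrence so the original order is preserved.
            if term not in merged:
                merged.append(term)
    return ", ".join(merged)


print(negative_prompt_for("brand_guidelines", "educational"))
```

An unknown situation name raises a `KeyError`, which is deliberate here: a silent fallback would make it easy to generate content with no negative prompt at all.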

Best Stable Diffusion Negative Prompts

To get reliable results from Stable Diffusion, you need well-chosen negative prompts. If you want the model to perform better, try any of these popular negative prompts:

- Do Not Make Up Information: With this negative prompt, you can tell the AI not to make up information. This is invaluable for instructional or informational purposes, where accuracy and integrity are paramount.

- Avoid Promoting Unsupported Claims: This negative prompt helps stop the AI from making content that supports claims that haven’t been proven or backed up by evidence. It helps keep the generated content credible and evidence-based.

- Refrain from Using Sensitive Language: Use this negative prompt to tell the AI not to make material with hurtful or inappropriate language. It’s particularly important when a kind and welcoming tone is required.

- Do Not Provide Medical Advice: Using this negative prompt can stop the model from making medical suggestions or findings in situations where AI-generated material shouldn’t replace professional medical advice.

- Avoid Violent or Harmful Content: This negative prompt helps keep the AI from producing violent or harmful content, which is useful for apps that must filter content to protect users.

- Do Not Include Specific Personal Information: When privacy is a concern, this negative prompt can stop the AI from making material that includes sensitive personal details.

These carefully constructed negative prompts allow users to tap into Stable Diffusion’s potential while exercising editorial oversight over created material to ensure it meets their requirements and adheres to their rules.

FAQs about Stable Diffusion Negative Prompt

1. What is Stable Diffusion in the context of generative AI?

Stable Diffusion is a text-to-image model that generates images from written prompts using a diffusion process. When users give the model specific instructions or boundaries, such as negative prompts, its results become more consistent and easier to control.

2. How do negative prompts work in Stable Diffusion?

Negative prompts in Stable Diffusion work by telling the model what kinds of output to avoid. By listing unwanted concepts in the negative prompt, users steer generation away from them, so the output is more likely to meet their requirements.

3. Can negative prompts eliminate unwanted content?

Negative prompts can reduce unwanted content, but they may not eliminate it. Keeping AI-generated material under control requires refining prompts over time, and users should review the generated output to confirm its quality.
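As a rough illustration of the second question above, common Stable Diffusion implementations apply negative prompts through classifier-free guidance: the model makes one noise prediction for the prompt and one for the negative prompt, then moves away from the negative prediction toward the positive one. The sketch below shows only that arithmetic on plain Python lists. The function name `guided_noise` and the numbers are hypothetical stand-ins for the model's real predictions.

```python
from typing import List


def guided_noise(
    negative_pred: List[float],
    positive_pred: List[float],
    guidance_scale: float,
) -> List[float]:
    """Start at the negative-prompt prediction and step toward the positive one."""
    return [
        neg + guidance_scale * (pos - neg)
        for neg, pos in zip(negative_pred, positive_pred)
    ]


positive = [0.5, 1.0]   # stand-in for the prediction conditioned on the prompt
negative = [0.25, 2.0]  # stand-in for the prediction conditioned on the negative prompt

print(guided_noise(negative, positive, 1.0))  # [0.5, 1.0]
print(guided_noise(negative, positive, 3.0))  # [1.0, -1.0]
```

A scale of 1.0 simply reproduces the positive prediction, so the negative prompt has no effect. Larger scales push the result further from the negative prediction, which is also why negative prompts reduce unwanted content without being able to guarantee its absence.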

Final Thought

Users who want more control over generative AI output can benefit greatly from negative prompts in Stable Diffusion. By providing specific guidelines and limitations, users can shape the generated material to suit their own requirements. Although these methods have flaws, they are a meaningful step toward more reliable and better-targeted results from AI models. Stable Diffusion and similar technologies will likely improve as artificial intelligence develops, leading to more precise and personalized outcomes. By adopting these methods, users can make fuller use of generative AI in a responsible way.