My Experience & Tips For 1-on-1 User Interviews

Hey, this is Morgan.

Last week I shared the lessons I learned from my users. If you’ve read it, you know I failed to get feedback from surveys but eventually got some valuable insights from 1-on-1 user interviews instead. Some of you asked for more details about exactly how I did it and for tips on 1-on-1 interviews, so I’d like to share my story with you here.

My Experience For 1-on-1 User Interviews

If you’re at the same early stage as I am, then rather than sending surveys to all of your users, you honestly need to start with 1-on-1 conversations: find out who your main target users are, and sit down with them over a call. However, it’s not just chatting with people, and it’s not just asking them what they want or what they like and dislike. User interviewing is about getting to the core of what a user is trying to do and what their problems are. Trust me, you will get insights beyond your expectations.

How Did I Conduct The 1-on-1 User Interviews?

Before The Interviews

After I identified the users for 1-on-1 interviews, I started to prepare the invitation email and the question list for interviews.

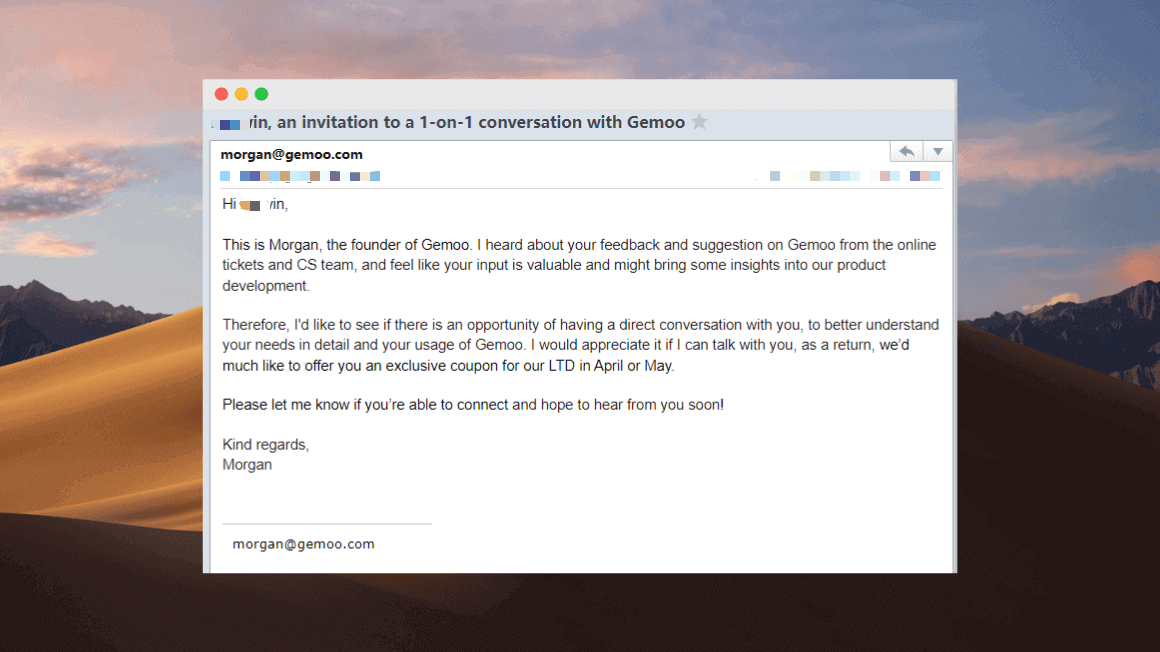

💬 Invitation Email

My subject line and email body were customized for each user, based on their earlier back-and-forth with me or my team. I’d say the subject should be direct and plain, and should include your purpose and product name. Also, avoid the word “interview” in both the subject and body of your invitation email. “Interview” is often associated with job interviews and can increase anxiety, so refer to it as a “chat” or “conversation” instead.

Mine was: “xxx (Name), an invitation for a 1-on-1 conversation with Gemoo.” The body had 3 parts: why this user was chosen; a brief explanation of what the conversation is about and why I need it; and appreciation and rewards.

Invitation Email

I sent the invitations out once they were ready, and 4 out of 6 users said they were willing to participate. Not bad! Then I discussed with them how they’d like to do it. I intentionally steered them toward a video call, but 2 of them went for email due to schedule conflicts. Email is not an ideal method for user interviews and it caused me a lot of trouble, which I’ll expand on below.

🏹 Question List

I started to prepare the question list for each user after I received their replies. My question list had two parts: questions that are widely used in usability research, and self-formulated questions based on the specific behavior of each user.

Choosing questions used in published research gives more assurance that they will produce valid data, while self-formulated questions fit specific users and make the interview more customized.

I also prepared some foreseeable follow-up questions, to get deeper insights from their answers.

🎯 The Problem I Ran Into

When the lists were ready, I sent them to the two users who chose to be interviewed via email. Given what I learned from that, I highly recommend you avoid email interviews if at all possible.

In my case, I’m still waiting for a reply from one of my email interviewees. It’s been over a month since I sent him the question list, and I’ve sent 2 or 3 follow-up emails asking for updates, but got no response at all. I know there’s a high probability that I won’t hear back from him. Honestly, I can totally understand it from his position: no one wants to type out long answers to each question, and there were around 15 of them. Jesus!

Interviews via email take much more time than those done via video call, and they put you at a disadvantage because you can do nothing but wait for the user’s reply after you send the questions. It could take a few days or a couple of weeks, or it could never come! As a result, you cannot ask follow-up questions easily and quickly. Even if you send a follow-up email, the answer you get may not reflect what the user was thinking at that moment. The best time to ask a follow-up question is always right after the user has answered your interview question.

During The Interviews

One more thing I did before each interview started: I asked whether I could record the whole conversation for review afterward, and made clear that the recording is confidential and will not be shared outside my team. I highly recommend you do the same, in case you miss some crucial details. You may remember the overall story of the conversation, but it’s hard to remember every detail while you’re talking, and sometimes vital information is hidden in the details.

I usually start interviews by introducing myself and asking some simple questions that users don’t have to think much about. This breaks the ice and gets them talking and comfortable. For instance: “How does your role fit into your organization? What does your typical day look like?” I probably know the answers to these two questions already, but I often get additional details that I didn’t know. These come in handy for follow-up questions, or when I’m putting together user scenarios and working out how and when my product fits into their working routine.

After the warm-up, I moved on to the specific questions, which are more closely related to my product. When users explained the problems they had using Gemoo, I usually asked them to share their screen, so I could see exactly what the problem was and what they were trying to do, with no room for misunderstanding.

🎯 The Problem I Ran Into

My first interviewee was an experienced user of SaaS products and very talkative, which in theory makes a perfect interviewee. But I was totally led by him, and the conversation drifted off track. I couldn’t find a way to steer it back to the topic, and I’m not the type to interrupt people when they’re talking.

This left the interview out of my control: it didn’t go the way I planned, and not all of my prepared questions were answered well. Fortunately, it was not completely useless. I learned things about LTD that I didn’t know, and this gave me the idea to release LTD ahead of schedule.

It’s good to be an active listener and let users talk, but don’t let them take the conversation too far off-topic. There is a balance between being polite and being dominant, and I still need to figure it out.

After The Interviews

📝 Transcribe Recorded Interviews

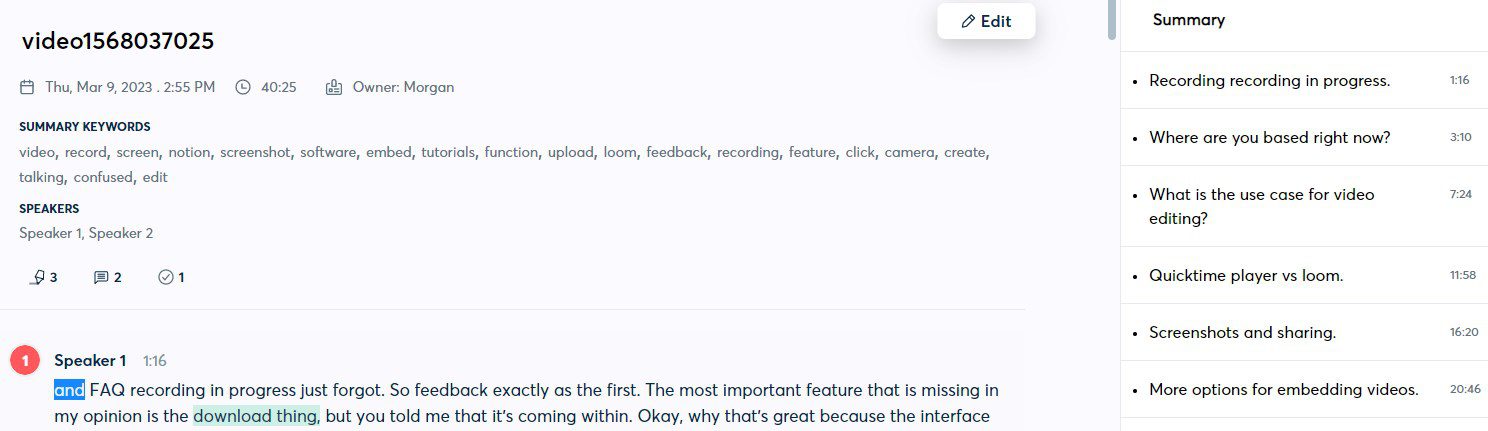

The first thing I did after each interview was to transcribe the recording into text. There are many tools for interview transcription that extract text from video or audio. I used Otter.ai, and so far so good. Transcription errors happen, but you can edit the text to correct them. It also summarizes your interview automatically, but further analysis is still needed to turn the raw data into insights.

Interview Transcription Using Otter.ai

🕵️ Data Analysis

I’ve only finished 2 video interviews so far, so my analysis was simply to pull insights out of each user’s responses and then convert my findings into outputs such as pain points, opportunity areas, user journeys, and user scenarios.

However, I don’t think this method is valid or rigorous enough in the long term. Qualitative data is inherently unstructured and thus difficult to analyze, and as the number of interviews grows, it will be even harder to compare responses across interviewees. My method seems to work now only because I don’t have many interviewees yet.

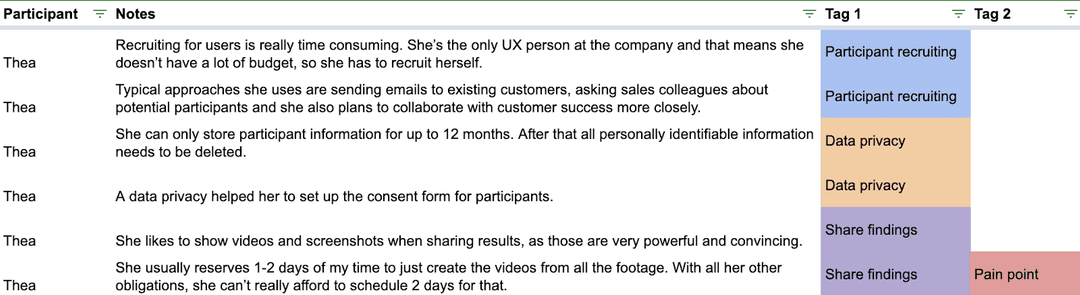

Therefore, once I have more user interviews, I plan to change my method and structure the data into themes. More precisely, I’ll label user responses with tags that map them to more generic themes. Most of the interview questions are the same for everyone (those taken from research), and only a few are customized for individual users. This makes it possible to quantify the qualitative data by counting how often each tag appears in answers to the same question. (In research, this process is called data coding.)
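For readers who want to try the tag counting by hand before picking a tool, here is a minimal Python sketch of the idea. It is only an illustration under assumptions: the question IDs, interviewee labels, and tag names are all made up, and the tags are assumed to have been assigned manually while reading the transcripts.

```python
from collections import Counter, defaultdict

# Hypothetical coded responses: (question_id, interviewee, tags assigned by hand).
# None of these IDs, names, or tags come from real interviews.
coded_responses = [
    ("Q1", "user_a", ["sharing", "onboarding"]),
    ("Q1", "user_b", ["sharing"]),
    ("Q1", "user_c", ["pricing"]),
    ("Q2", "user_a", ["pricing"]),
    ("Q2", "user_b", ["pricing", "onboarding"]),
]


def count_tags_per_question(responses):
    """Return {question_id: Counter of tags}, counting each tag once per interviewee."""
    counts = defaultdict(Counter)
    for question_id, _interviewee, tags in responses:
        # set() keeps a tag from being counted twice within one answer
        counts[question_id].update(set(tags))
    return dict(counts)


tag_counts = count_tags_per_question(coded_responses)

# Print the tags for each question, most frequent first
for question_id, counter in sorted(tag_counts.items()):
    print(question_id, counter.most_common())
```

Grouping by question first is what keeps the numbers comparable: a tag count only means something when every interviewee was asked the same base question.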

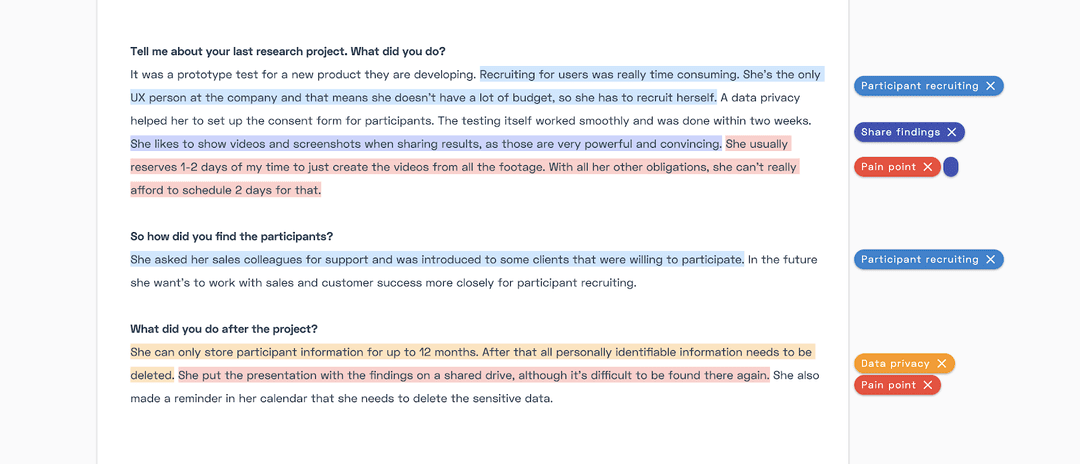

User research tools like Atlas.ti and Coudens can help with the coding. Below is a sample provided by Coudens of labeling user responses with tags, and I plan to try this method in my next interview:

Sample for Labeling User Responses Using Tags

Assigning Tags Directly from Interview Transcription

My Dos & Don’ts For 1-on-1 User Interviews

There are plenty of tips for user interviews if you google them. Here are my dos & don’ts, summarized from personal experience and the mistakes I’ve made.

Dos 😄

- Map out your questions (including some follow-up questions) in advance

- Use the same base set of questions every time: This keeps your user interviews methodologically valid. Don’t wing it and ask random stuff when you interview users, as that could ruin your data.

- Do it in person or via video call instead of via email: Some information is hidden in gestures and facial expressions, and you can easily pick it up in person or on a video call. Please avoid email if possible. It’s time-consuming and rarely yields quality data. Let my experience be a lesson for you :)

- Ask open-ended questions: Don’t ask yes/no questions. Open-ended questions yield expansive responses, and they’re the key to finding out what users are trying to do and what their problems are. For example, you may ask, “Do you share video recordings?” expecting users to say that they do and then detail what kinds of videos they share and with whom. But it’s actually very likely they’ll just respond with “yes.”

- Ask basic and easy questions first, and move on to the specific questions afterward: You can miss a lot of key points if you don’t ask basic questions. This often happens because you assume you already know the answer. Don’t be afraid to ask so-called dumb questions. Sometimes you learn something new from the responses.

Don’ts 🙁

- Don’t allow the interviewee to take the conversation too far off track

- Don’t ask leading or directed questions: Leading questions hand users possible answers, and you won’t get honest, real feedback from them.

- Don’t ask users what they want directly: Your questions should not be, “What would you like us to do?” Rather, they should be, “What are you trying to do?” If you ask the former, you can get hundreds of different answers. The purpose of user interviews is to find out what the user’s problems are and what they’re trying to do, then build the future.

- Don’t waste the data you get: There is a lot of information in user interviews, so use appropriate data analysis methods to get the most out of it.

I’m no expert in this. I just wanna share my story with founders who plan to do user interviews as well. It’s not as easy as I imagined, and there are methodologies that should be followed. I’m learning as I go. Please DM me via MorganKung7 if you have better advice. I’m open to further discussion with you.